Transform your Windows PC into a private AI powerhouse by running ChatGPT-level language models completely offline. LM Studio eliminates the need for cloud services while giving you unlimited access to cutting-edge LLMs without subscription fees or data privacy concerns.

What Makes LM Studio Essential for LLM Enthusiasts

LM Studio stands out as the premier desktop application for running Large Language Models locally on Windows, Mac, and Linux systems. Unlike complex command-line tools that demand technical expertise, LM Studio provides an intuitive graphical interface that makes advanced AI accessible to both beginners and experienced developers.

The platform bridges the gap between sophisticated AI technology and practical everyday use, offering a ChatGPT-like experience without relying on external cloud services. For LLM enthusiasts, this means complete control over model selection, parameter tuning, and conversation privacy.

Why Local LLM Deployment Matters

Running LLMs locally through LM Studio offers compelling advantages for AI practitioners:

- Absolute Privacy: Your conversations and data never leave your computer

- Zero Internet Dependency: Run models completely offline after initial download

- Cost-Free Operation: No subscription fees, API costs, or token limits

- Unlimited Experimentation: Test different models and configurations without restrictions

- Enterprise Security: Perfect for sensitive business applications requiring data isolation

- Model Flexibility: Access thousands of models from Hugging Face’s repository

- Custom Configuration: Adjust model behavior, chat settings, and system prompts

System Requirements for Optimal LLM Performance

Before diving into installation, ensure your Windows machine meets these specifications for smooth LLM operation:

Minimum Configuration:

- Windows 10 or 11 (64-bit architecture)

- AVX2-compatible processor (standard on modern CPUs)

- 8GB RAM (though 16GB strongly recommended)

- 10-50GB free storage (varies by model size)

- Administrator privileges for installation

Recommended Setup for Serious LLM Work:

- 16GB+ RAM for handling larger models

- SSD storage with 100GB+ free space for faster model loading

- NVIDIA GPU with CUDA support for accelerated inference

- High-speed internet for initial model downloads

Pro Tip: To verify AVX2 support, open System Information (msinfo32), note your processor model, and look it up in Intel ARK or AMD’s documentation.
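The checks above can also be scripted. The following is a minimal sketch, not an official LM Studio tool: it assumes Python 3 is installed, uses the Windows `IsProcessorFeaturePresent` call with the `PF_AVX2_INSTRUCTIONS_AVAILABLE` constant (value 40 in `winnt.h`), and the 10 GB threshold is simply the low end of the storage range listed above. The function names are made up for illustration, and older Windows builds may report False for a feature flag they do not know about, so treat a negative result as a prompt to double-check manually.

```python
import ctypes
import shutil
import sys

# Feature flag from winnt.h, passed to IsProcessorFeaturePresent.
PF_AVX2_INSTRUCTIONS_AVAILABLE = 40


def has_avx2():
    """Return True/False on Windows, or None where this check does not apply."""
    if sys.platform != "win32":
        return None  # this sketch only covers Windows
    kernel32 = ctypes.windll.kernel32
    return bool(kernel32.IsProcessorFeaturePresent(PF_AVX2_INSTRUCTIONS_AVAILABLE))


def free_gb(path="."):
    """Free space in decimal gigabytes on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


def preflight(path=".", min_free_gb=10):
    """Collect the checks into a small report dictionary."""
    free = free_gb(path)
    return {
        "avx2": has_avx2(),
        "free_gb": round(free, 1),
        "enough_disk": free >= min_free_gb,
    }


print(preflight())
```

Pass the drive you plan to use for models as `path`, and raise `min_free_gb` if you intend to keep several large models.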

Step-by-Step LM Studio Installation

Download and Initial Setup

Getting LM Studio running on your Windows system takes just minutes:

- Navigate to the official LM Studio website at https://lmstudio.ai/

- Click the Windows download button to get the ~402MB installer

- Right-click the downloaded file and select “Run as administrator”

- The installer launches automatically without complex configuration wizards

- Select your installation directory (choose a drive with ample free space)

- Complete the installation process, then launch LM Studio from the Start menu or desktop shortcut

First Launch Configuration

Upon launching LM Studio, you’ll encounter a clean interface with four main tabs: Discover, Chat, My Models, and Developer. Before downloading your first model, configure the storage location to optimize disk space usage.

Setting Up Model Storage:

- Navigate to the “My Models” tab

- Click the three-dots menu and select “Change” next to the directory path

- Choose a drive with substantial free space (external drives work well)

- Create a dedicated folder like “LM Studio Models”

- Confirm the new storage location

Selecting and Downloading Your First LLM

The model selection process significantly impacts your LM Studio experience. LM Studio’s “Discover” tab showcases recommended models highlighted in purple, with search and filtering capabilities for finding specific architectures.

Model Selection Strategy by Use Case

| Model Type | RAM Requirement | Best Applications | Example Models |
|---|---|---|---|
| 3B Parameters | 8-16GB | Learning, quick responses | Llama 3.2 3B |
| 7-8B Parameters | 16GB+ | General purpose, balanced performance | Llama 3.1 8B, Mistral 7B |
| 13B+ Parameters | 24GB+ | Advanced reasoning, complex tasks | CodeLlama 13B |
| Specialized Models | Varies | Domain-specific tasks | DeepSeek Coder |
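To see roughly where these RAM figures come from, a model's weight size can be estimated from its parameter count and the number of bits stored per weight. The sketch below is a rule of thumb only: the 1.2 overhead factor (for context cache and runtime buffers) is an assumption chosen for illustration, real quantized files often use slightly more than their nominal bit width, and the table's RAM figures also leave room for Windows and other applications.

```python
def estimate_model_gb(params_billions, bits_per_weight=4.0, overhead=1.2):
    """Rough memory estimate in decimal GB for a loaded model.

    params_billions: parameter count in billions (e.g. 8 for an 8B model)
    bits_per_weight: nominal quantization width (4 for 4-bit, 16 for FP16)
    overhead:        assumed multiplier for cache and runtime buffers
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * overhead / 1e9


for size in (3, 8, 13):
    for bits in (4, 8, 16):
        print(f"{size}B at {bits}-bit: ~{estimate_model_gb(size, bits):.1f} GB")
```

Under these assumptions an 8B model at 4 bits needs about 4.8 GB, while the same model at 16 bits needs about 19.2 GB, which is why quantized versions are the practical choice on a 16GB machine.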

Download Process

- Browse the Discover tab or search for specific models

- Review model descriptions, sizes, and quantization options

- Check system compatibility warnings

- Click the green download button

- Monitor download progress (models range from 2-50GB)

- Verify successful download in the “My Models” tab
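If you prefer to confirm the download from outside the application, you can list the model files on disk. This is a small sketch that assumes your models are stored as `.gguf` files (the format commonly used for local inference) somewhere under the storage folder you chose earlier; the function name and the example path are hypothetical.

```python
from pathlib import Path


def list_gguf_models(models_dir):
    """Return (relative path, size in GB) for every .gguf file under models_dir."""
    root = Path(models_dir)
    if not root.is_dir():
        return []  # folder missing or not yet created
    found = []
    for path in sorted(root.rglob("*.gguf")):
        size_gb = path.stat().st_size / 1e9
        found.append((str(path.relative_to(root)), round(size_gb, 2)))
    return found


# Example call with a hypothetical storage location:
# for name, size in list_gguf_models(r"D:\LM Studio Models"):
#     print(f"{size:6.2f} GB  {name}")
```

A file that is much smaller than the size shown on the model's download page is a likely sign of an interrupted download.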

Running Your First Local LLM Session

With your model downloaded, experience the power of local AI inference:

Loading and Initializing Models

- Switch to the “Chat” tab

- Click “Select a model to load” at the top of the interface

- Choose your downloaded model from the available list

- Wait 30-60 seconds for model loading into system memory

- Confirm successful loading by checking the displayed model name

Optimizing Model Parameters

Adjust your LLM’s behavior through LM Studio’s parameter controls:

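- Temperature: Control sampling randomness (around 0.1 for focused, repeatable responses; around 1.0 for more varied, creative output)

- Top-p: Limit sampling to the most probable tokens whose combined probability reaches p; lower values give more conservative output

- Max Tokens: Cap the length of each response

- Context Length: Set how many tokens of prompt and conversation history the model can see at once

- System Prompts: Establish AI personality and behavioral guidelines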
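To build intuition for the first two controls, here is a toy illustration of temperature scaling and top-p (nucleus) filtering applied to a made-up list of token scores. It sketches the general sampling technique and is not LM Studio's internal code; the function names and the example scores are invented for the demonstration.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the peak."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p=0.9):
    """Keep the smallest set of most likely tokens whose mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    # Renormalise so the surviving probabilities sum to 1.
    return {i: probs[i] / mass for i in kept}


scores = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
for t in (0.1, 1.0):
    probs = softmax_with_temperature(scores, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
print("top_p=0.7 keeps:", top_p_filter([0.5, 0.3, 0.2], top_p=0.7))
```

At a temperature of 0.1 nearly all probability lands on the top-scoring token, while at 1.0 the alternatives keep a meaningful share. Top-p then discards the unlikely tail before a token is drawn.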

Advanced Features for LLM Developers

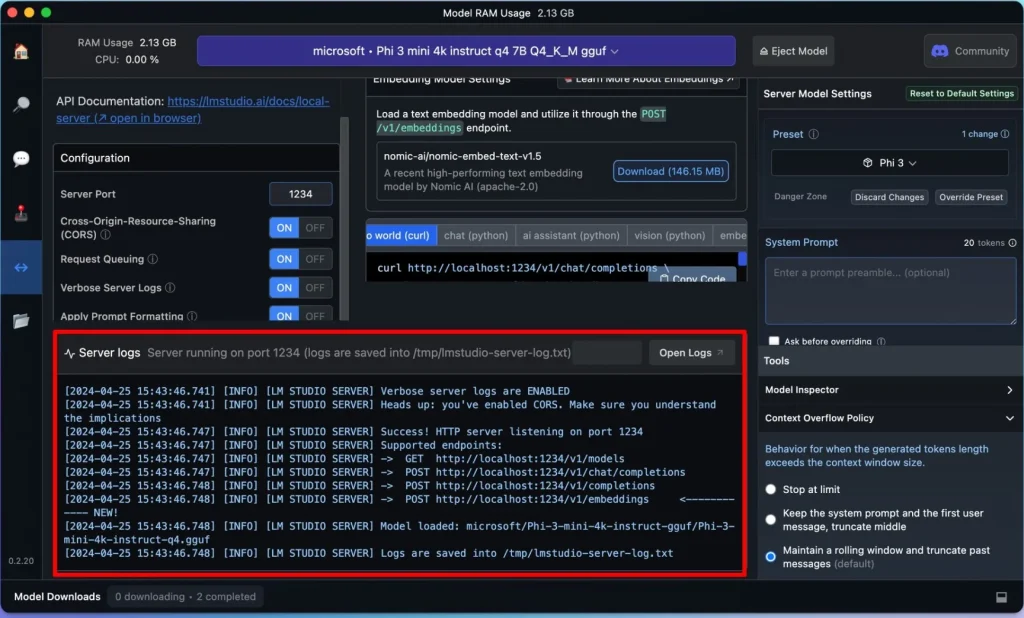

Local API Server Setup

LM Studio transforms your local models into API-accessible services, enabling integration with external applications:

- Navigate to the “Developer” tab

- Toggle the status from “Stopped” to “Running”

- Configure server settings, including CORS enablement

- Access your models via http://127.0.0.1:1234

- Test functionality by visiting http://127.0.0.1:1234/v1/models
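Once the server is running, other programs can talk to it over HTTP. The sketch below uses only Python's standard library and assumes the server follows the OpenAI-style conventions suggested by the `/v1/models` path, including a `/v1/chat/completions` endpoint and the usual response shape. The helper names, default parameter values, and timeouts are illustrative choices, not part of LM Studio.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:1234/v1"  # address shown in the Developer tab


def build_chat_request(model, user_message, temperature=0.7, max_tokens=256):
    """Build (but do not send) a chat request in the OpenAI-style format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_models(timeout=5):
    """Return the model identifiers reported by the running server."""
    with urllib.request.urlopen(f"{BASE_URL}/models", timeout=timeout) as resp:
        body = json.load(resp)
    return [entry["id"] for entry in body.get("data", [])]


def chat(model, user_message, timeout=120):
    """Send one message and return the assistant's reply text."""
    request = build_chat_request(model, user_message)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# With the server running and a model loaded:
# models = list_models()
# print(chat(models[0], "Say hello in one sentence."))
```

Both network calls raise `urllib.error.URLError` if the server is stopped, so a failure here usually means the toggle in the Developer tab is still set to “Stopped”.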

Multi-Model Management

Efficiently manage multiple LLMs for different specialized tasks:

- Download models optimized for specific domains (coding, writing, analysis)

- Switch between models instantly through the chat interface

- Compare responses across different architectures

- Maintain collections of specialized models for various projects

Troubleshooting Common LLM Deployment Issues

Performance Optimization:

- Start with smaller models and gradually scale up

- Close resource-intensive applications during LLM operation

- Use SSD storage for faster model loading

- Monitor system resources to prevent memory bottlenecks

Model Loading Problems:

- Verify sufficient RAM for your chosen model size

- Check download integrity and re-download if necessary

- Ensure stable internet connection during initial downloads
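One way to check download integrity before re-downloading is to compare the file's SHA-256 hash with a published value. This sketch assumes the model's hosting page lists a SHA-256 checksum for the file, which is not guaranteed for every model; the function names are made up for illustration.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so multi-gigabyte models do not fill RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, expected_hex):
    """Compare the file's hash with a checksum copied from the download page."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

If `matches_published_hash` returns False for a model that fails to load, the file is most likely corrupted or incomplete and should be downloaded again.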

Security and Privacy Advantages

Local LLM deployment through LM Studio provides enterprise-grade privacy benefits crucial for sensitive applications:

- Data Sovereignty: All conversations remain on your local system

- Offline Operation: Complete functionality without internet connectivity

- Zero Telemetry: No external data collection or tracking

- Compliance Ready: Meets strict data protection requirements

- Unlimited Usage: No rate limiting or usage monitoring

Maximizing Your Local LLM Setup

Best Practices for LLM Enthusiasts:

- Experiment with different quantization levels for performance optimization

- Create specialized system prompts for different use cases

- Maintain a diverse model library for various applications

- Update LM Studio regularly for access to the latest model releases and features

- Engage with the community for insights into optimal configurations

Future-Proofing Your Installation:

- LM Studio receives regular updates with new features and model support

- Growing ecosystem of specialized models for emerging domains

- API compatibility enables integration with custom applications

- Community-driven development ensures continued innovation

Conclusion: Unleashing Local LLM Power

LM Studio democratizes access to powerful language models, enabling AI enthusiasts to run sophisticated LLMs locally with complete privacy and unlimited usage. This comprehensive installation guide provides everything needed to transform your Windows PC into a private AI workstation capable of running ChatGPT-level models entirely offline.

Whether you’re developing AI applications, conducting research, or simply exploring the capabilities of modern language models, LM Studio offers the perfect platform for local LLM deployment. Start with smaller models to familiarize yourself with the interface, then gradually explore more powerful architectures as your confidence and system resources allow.

The future of AI computing lies in local deployment, and LM Studio positions you at the forefront of this revolution. Download LM Studio today and experience the freedom of running cutting-edge language models entirely on your own terms.
